The Prophet of the "Dead End": Why Yann LeCun's Departure From Meta Is the Only AI Story That Matters.

LeCun is warning of architectural obsolescence. He’s saying that companies built entirely on LLM scaling may face the same fate as buggy-whip manufacturers in 1910.

Yann LeCun just left Meta.

Let that sink in. This isn’t just another C-suite executive changing LinkedIn bios. This isn’t a quiet retirement or a golden-handshake shuffle.

This is Yann LeCun!

The man who, alongside Geoffrey Hinton and Yoshua Bengio, forms the holy trinity of modern artificial intelligence. The Turing Award winner. The “Godfather” who literally wrote the book on convolutional neural networks, the technology that gave computers sight and powers your phone’s camera.

For more than a decade, he has led AI research at Meta, most recently as Chief AI Scientist. He was the intellectual and moral center of an R&D effort backed by a firehose of cash so vast it defies comprehension: tens of billions of dollars per year, all aimed at winning the AI race.

And he’s gone.

He didn’t go to Google. He didn’t go to OpenAI. He’s not cashing out to a venture capital firm.

He left to found a startup. A startup dedicated to building a technology that Meta, in its infinite wisdom and with its tens of billions in annual R&D spending, apparently doesn’t prioritize.

This move is more than just a headline. It’s a seismic tremor, and the epicenter is located directly beneath the entire foundation of the current AI boom. This matters more than most people realize, and you need to understand why.

The Man Who Has Been Right for 40 Years

To grasp the magnitude of this event, you must first understand who Yann LeCun is. He is not just an expert; he is a contrarian prophet, a man whose entire career is a monument to being early, being ridiculed, and being proven catastrophically right.

In the 1980s, LeCun was a young researcher in Paris obsessed with a fringe idea: building “neural networks” that could learn, inspired by the human brain. He couldn’t find a PhD adviser. The academic consensus was that this entire approach, known then as “connectionism,” was a dead end. It was a career-killer, a computational fantasy. The field was in the depths of an “AI winter,” and LeCun was wandering in the snow.

He pursued it anyway. Three decades later, that “dead end” became the foundation of everything. It is the engine behind modern translation, medical imaging, self-driving cars, and the entire generative AI revolution.

LeCun has spent 40 years being early and being right, often in the face of overwhelming consensus to the contrary. He is the last person you bet against.

And now, he is doing it again. He’s standing in the middle of the world’s biggest gold rush, a frenzy built on Large Language Models (LLMs)—the technology behind ChatGPT, Gemini, and Meta’s own Llama—and he is calmly, publicly, and repeatedly calling it another dead end.

“Nobody in their right mind would use LLMs of the type that we have today” within three to five years, he stated plainly at an event at MIT last month.

He’s not hedging. He’s not qualifying. He’s drawing a line in the sand.

The Cat is Smarter Than the Supercomputer

LeCun’s critique isn’t a minor technical quibble. It is a fundamental indictment of the entire LLM paradigm.

Current AI, he argues, is built on a brittle foundation. LLMs, at their core, are text-prediction engines. They are master synthesizers of the data they’ve been fed, which is, to be fair, nearly the entire internet. They work by statistically calculating the most probable next word in a sequence.
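To make the idea concrete, here is a deliberately tiny sketch of next-word prediction in Python. It is a toy bigram counter, not how production LLMs are built (those use neural networks over tokens and far longer contexts), and the function names `train_bigram` and `predict_next`, like the sample corpus, are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical three-sentence "internet" used only for this illustration.
corpus = "the pen falls to the floor . the pen falls off the desk . the cat sleeps"
model = train_bigram(corpus)

print(predict_next(model, "pen"))      # prints "falls"
print(predict_next(model, "the"))      # prints "pen"
print(predict_next(model, "gravity"))  # prints "None": the word never appeared
```

The toy model “knows” that pens fall only in the sense that the word “falls” tends to follow the word “pen” in its training text. That is the gap LeCun keeps pointing at, shrunk down to a dozen lines.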

This is a powerful illusion. It can write a sonnet, a legal brief, or a Python script. But as LeCun tirelessly points out, it understands none of it.

An LLM does not know that objects exist when you’re not looking at them (object permanence). It has no intuitive grasp of physics; it can describe gravity because it has read the textbook description thousands of times, but it doesn’t know that if you let go of a pen, it will fall. It has no causal understanding of the world. It is, in his own words, a system with “the intelligence of a cat.”

And then, in a devastating intellectual backhand, he adds that the cat is probably smarter.

Why? Because a house cat, with its walnut-sized brain, possesses something the world’s most advanced, multi-billion-dollar supercomputers do not: a world model.

A cat understands physics. It intuitively calculates trajectory, friction, and velocity to land on a narrow fence. It understands object permanence; it knows the mouse is still behind the wall. It understands cause and effect; it plans, it predicts, and it operates in a 3D world with genuine, if limited, reasoning.

LLMs, LeCun argues, are disembodied mimics. They are auto-completing on a cosmic scale. He believes that no amount of scaling—making the models bigger, feeding them more text, and burning more of the planet’s energy—will ever bridge the gap from text prediction to genuine intelligence. You cannot get to AGI (Artificial General Intelligence) from here.

The Alternative: Building a “World Model”

This is not just academic criticism. This is the entire thesis of his new company.

LeCun is leaving the comfort of his Meta throne to build his alternative: world models.

His vision is to create AI systems that learn not from static text but from high-bandwidth, dynamic, sensory data—specifically, video. He wants to build systems that learn the way a human infant does: by observing, interacting, and building an internal, causal model of how the world works.

A child learns physics not by reading Wikipedia, but by dropping a spoon from a high chair thousands of times, watching it fall, and hearing it clatter. That child is building a predictive, causal model of reality. That, LeCun insists, is the path to real intelligence.
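As a rough illustration of learning a predictive model from observation rather than from text, the sketch below fits a single number, the acceleration due to gravity, to simulated drop measurements and then predicts a drop it has never seen. It is an assumption-laden toy: it is handed the form of the physical law (d = 0.5 * g * t^2) and noise-free data, whereas the world models LeCun describes would have to discover such structure from raw video. The names `fit_gravity` and `predict_drop` are hypothetical.

```python
def fit_gravity(observations):
    """Least-squares estimate of g in d = 0.5 * g * t**2 from (time, distance) pairs."""
    numerator = sum(d * t**2 for t, d in observations)
    denominator = sum(t**4 for t, _ in observations)
    return 2.0 * numerator / denominator


def predict_drop(g, t):
    """Predict how far an object falls in t seconds under acceleration g."""
    return 0.5 * g * t**2


# Simulated "spoon drops": fall times in seconds paired with distances in metres.
# TRUE_G is used only to generate the observations; the model never sees it directly.
TRUE_G = 9.81
observations = [(t, 0.5 * TRUE_G * t**2) for t in (0.2, 0.3, 0.4, 0.5)]

g_hat = fit_gravity(observations)
print(round(g_hat, 2))                     # prints 9.81
print(round(predict_drop(g_hat, 1.0), 3))  # prints 4.905, for a drop time never observed
```

The point of the contrast with text prediction is that this model answers a “what if” question about the world (how far will it fall in one second?) from measurements, not from sentences about falling.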

His goal is to build an AI that can watch a video and not just label the objects (“car,” “street,” “person”) but understand the scene. It would know that the car is a solid object, that the person is in front of it, and that if the car moves forward, the person is in danger. It’s about moving from “what” to “why” and “what if.”

This is a radically different and exponentially harder problem than what LLMs are solving. And it’s what he’s staking the final act of his career on.

The Meta Paradox and the Bet

This brings us to the terrifying cognitive dissonance at the heart of Meta.

For the past two years, Meta has been spending countless billions of dollars and aligning its entire corporate strategy around a technology—LLMs (Llama)—that its own Chief AI Scientist, its most prized and decorated researcher, has been publicly and relentlessly calling a dead end.

Think about that. It’s an absurdity. Imagine if Nike’s chief shoe designer announced that sneakers were a “fad” and that everyone would be back in leather oxfords in three years, all while the company was spending $20 billion on a new “Air” factory.

This leaves us with only two possibilities, both of which are deeply troubling.

Possibility 1: Yann LeCun is wrong.

It’s possible. Even geniuses sometimes miss. His 40-year track record is immaculate, but maybe this time he’s the one who is intellectually stuck. Maybe scaling LLMs will work. Maybe “more is different,” and at some unfathomable scale, Llama-10 will spontaneously “wake up” and understand physics. Perhaps LeCun, the original brain-inspired pioneer, is ironically the one who can’t see the magic of a new, emergent paradigm.

Possibility 2: Meta is knowingly chasing a dead end.

This is the far more frightening and more likely scenario. In this version, Meta’s leadership knows (or at least suspects) LeCun is right. They understand the deep architectural limitations of LLMs. But they are trapped.

They are trapped in a market narrative, a frantic race with Google, Microsoft, and OpenAI. They must have a ChatGPT competitor because the market, the press, and their shareholders demand it. To publicly pivot and say, “Actually, our top scientist thinks this is all nonsense, and we’re going to spend five years quietly researching his video-based world models,” would be corporate suicide. The stock would evaporate overnight.

So, they are forced to chase the market narrative over the technical reality. They are forced to pour billions into a technology their own prophet has disavowed.

Yann LeCun’s departure is his answer.

He’s done talking. He’s done being the internal voice of dissent, the polite academic at the corporate table. He has concluded that the organization is incapable of making the necessary pivot.

His leaving is not a resignation. It is a vote of no confidence. It is a tangible, multi-billion-dollar bet that his own employer is fundamentally, strategically, and architecturally wrong.

The Coming “Architectural Obsolescence”

If LeCun’s 3-5 year timeline holds, the implications are staggering. We are not just in an “AI bubble”; we are in the midst of building the world’s most expensive and complex Tower of Babel, and the architect just walked off the job, warning that the foundations are sand.

Trillions of dollars in market capitalization—from Nvidia’s silicon to the entire ecosystem of “GPT-wrapper” startups—are predicated on the single belief that scaling LLMs is the path to the future.

LeCun is warning of architectural obsolescence.

He’s saying that companies built entirely on LLM scaling may face the same fate as buggy-whip manufacturers in 1910. It doesn’t matter how good your buggy whip is, or how much data you use to optimize its aerodynamics. The world has moved on to the internal combustion engine.

LeCun’s new venture is him walking out of the buggy-whip factory, grabbing a wrench, and heading to Henry Ford’s garage.

This is not a guarantee. Even geniuses can be wrong. LeCun’s world-model approach may prove to be computationally intractable, a 50-year problem, not a 5-year one. LLMs, for all their deep flaws, might be the “good enough” technology that dominates the next decade, just as fossil fuels, for all their flaws, powered the 20th century.

But Yann LeCun has never been wrong about something this big before.

He was right about neural networks when they were a joke. He was right about deep learning when it was a fantasy. Now, he is placing the single biggest bet of his life. He is betting his reputation, his legacy, and the final, most productive years of his career on one profound, terrifying idea: The AI revolution we think is happening isn’t the real one. It’s a false spring. It’s a shadow-play on the cave wall. And he’s just left the cave, on his own, to go find the fire.

The Ouroboros Engine: Why the Internet is Eating Itself Alive.

There is a feeling you get scrolling, a digital nausea you can’t quite place. It’s a sense of unsettling sameness, a whisper of déjà vu in every listicle, every corporate blog post, every blandly helpful answer. The internet, once a chaotic, sprawling, and unmistakably human metropolis, now feels different. It feels sterile. Repetitive. Thin.

The Turbine Age: When AI Devours the World and Feeds on Jet Engines.

The image is straight out of a cyberpunk novel. An anonymous warehouse, somewhere in the vast American industrial sprawl. Inside, not rows of servers, but the humming heart of a former Boeing 747. The shrieking cry of its turbine no longer tears through the skies; on the ground, it feeds the insatiable appetite of artificial intelligence.

The Unprecedented Crisis: Why the Federal Reserve Is Facing Its Own Extinction.

The Fed is now confronting a seismic shift, a structural break unlike anything in its 110-year history. The twin pillars of its mandate are about to be shattered.

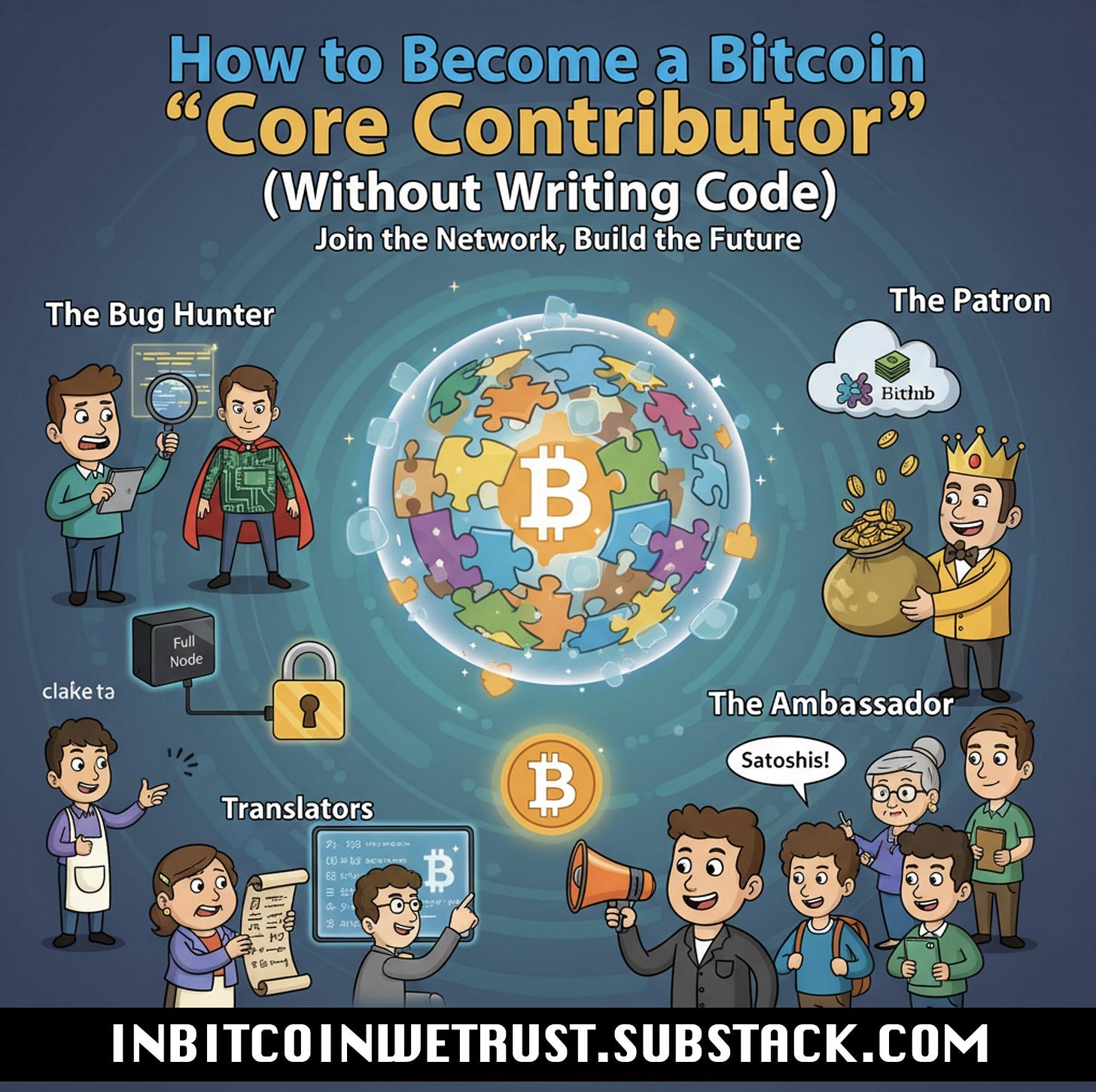

How to Become a Bitcoin “Core Contributor” (Without Writing Code).

Stop thinking of “Core” as an exclusive title you can’t earn. Start thinking of it as a function you can perform. Pick your role. The Bitcoin network needs you.

The comparison to buggy-whip manufacturers is chillingly apt. If LeCun's track record tells us anything, it's that betting against him on fundamental architecture shifts has been a losing strategy for 40 years. The world model approach makes intuitive sense when you think about how actual learning happens. Meta's position must be incredibly uncomfortable right now, billions deep into a technology their own prophet just called obsolete.