The Ouroboros Engine: Why the Internet Is Eating Itself Alive.

The AI “stomach” consumes, it excretes, and it consumes again. This is its only function. It is a perpetual motion machine of mediocrity.

There is a feeling you get scrolling, a digital nausea you can’t quite place. It’s a sense of unsettling sameness, a whisper of déjà vu in every listicle, every corporate blog post, every blandly helpful answer. The internet, once a chaotic, sprawling, and unmistakably human metropolis, now feels different. It feels sterile. Repetitive. Thin.

This feeling isn’t your imagination. It’s a diagnosis. The internet is developing an autoimmune disease. It is consuming itself. And we have the data to prove it.

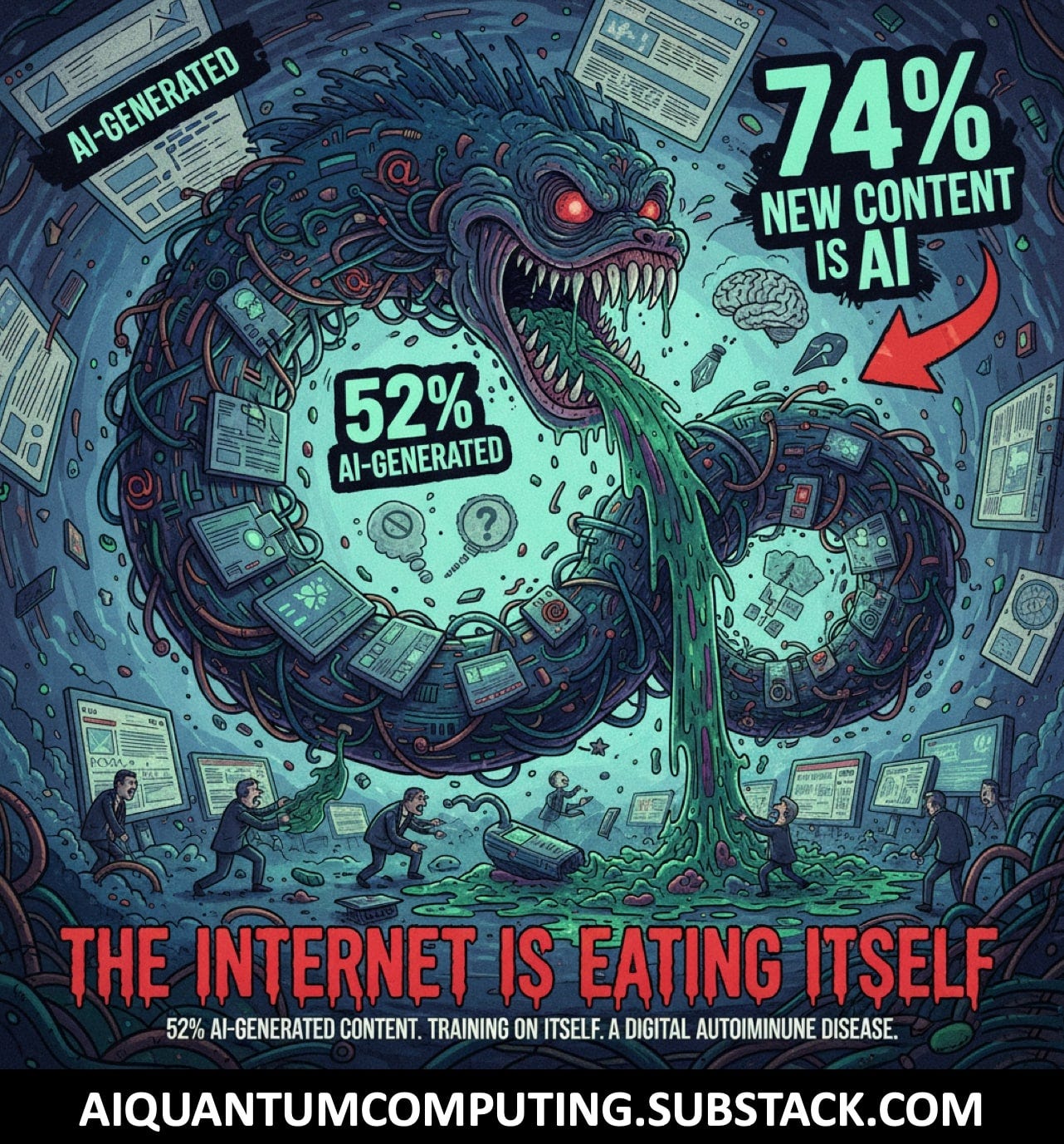

New research has just dropped a set of numbers that should terrify anyone who cares about truth, culture, or the future of human knowledge. According to a 2025 analysis of over 65,000 URLs by Graphite Research, 52% of all content on the internet is now AI-generated.

Let that sink in. More than half of the digital world is no longer human.

This is not a gradual shift. This is an avalanche. In 2022, that number was estimated to be just 10%. The hockey-stick curve we all feared is here, and it’s steeper than anyone predicted.

But that 52% figure, as staggering as it is, is already out of date. It’s a snapshot of the entire internet, including all the legacy content from the last 30 years. The real story—the one that shows where we are going—is far more extreme.

A separate 2025 study by Ahrefs, which analyzed 900,000 newly created web pages, found that 74% of all new content published to the web contains AI-generated text.

The internet is not just being eaten. It is being mass-produced by the thing that is eating it.

The Common Crawl Foundation, which archives the web, reports that its database has been swelling by 3 to 5 billion new pages every month throughout 2024. The vast majority of this digital new-build is synthetic, generated by algorithms, for algorithms.

We are witnessing the greatest replacement event in information history. We are replacing the sprawling, messy, hand-written library of human thought with an infinitely expanding hall of funhouse mirrors, each one reflecting a dimmer, more distorted version of the last.

The Stomach and the Snake

To understand why this is an extinction-level event for knowledge, you must first understand what a Large Language Model (LLM) truly is.

We have been sold a romantic metaphor. We call it “artificial intelligence,” and we picture a brain. We imagine a silicon consciousness, a new form of “thought.” This is a catastrophic branding error.

LLMs are not brains. They are giant, insatiable stomachs.

A brain thinks. It reasons, it feels, it experiences the world, and it generates novel ideas based on that unique, lived experience. A stomach, by contrast, only processes. It consumes everything it can find, breaks it down into a uniform slurry, and excretes a new substance.

This is precisely what an LLM does. It scrapes the internet, all of it. It consumes every human-written book, every forgotten blog, every scientific paper, every poem, every bitter forum post, and every drop of hate speech. It understands none of it. It simply identifies statistical patterns and, when prompted, excretes a new text: a “statistically probable simulation” of the content it consumed.

For a time, this process was parasitic but functional. The AI “stomach” consumed real, human-generated food (our data) and excreted a plausible, if soulless, product.

Now, the cannibalism has begun.

With 52% of the internet already synthetic, and 74% of new pages containing synthetic text, the AI stomachs are increasingly left to eat their own excretions.

This is the Ouroboros Engine. The mythical snake eating its own tail.

When an AI model trained on the 2022 internet (mostly human) generates content, it’s a first-generation copy. But when a 2025 model trains on the 2025 internet, it is training, in large part, on the output of other AIs. It is consuming the synthetic. It is eating its own waste.

The result is an infinite feedback loop of synthetic regurgitation. And this loop has a name.

Model Collapse: The Great Forgetting

In 2024, a team of researchers (Shumailov et al.) published a landmark paper in Nature that gave a formal name to our terror: model collapse.

The paper, titled “AI models collapse when trained on recursively generated data,” demonstrated, in theory and in experiments, what we now see happening in real time. When an AI model is trained indiscriminately on data generated by other AIs, it doesn’t get smarter. It develops what the authors call “irreversible defects.”

The models, the researchers found, begin to “forget” the original, underlying reality, and the tails of the distribution (the rare, improbable cases) are the first to disappear. They amplify their own biases and statistical quirks, mistaking them for the real thing. They start to overfit on the “average” of their own output, pushing their understanding of reality toward a bland, characterless center.

Think of it as photocopying a photocopy. The first copy looks sharp. The tenth is a little blurry. The hundredth is an unrecognizable smudge. We are now building our most advanced technologies by feeding them the thousandth-generation photocopy of human knowledge.
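To make the photocopy analogy concrete, here is a minimal toy simulation, loosely in the spirit of the simple Gaussian examples often used to explain model collapse. It is not the setup or code from Shumailov et al. Generation 0 is a standard normal distribution standing in for human data, and each later “model” is nothing more than a mean and standard deviation fitted to a small batch of samples drawn from the previous model. The function name `collapse_gaussian` and the parameters (20 samples per generation, 200 generations) are illustrative assumptions.

```python
import random
import statistics


def collapse_gaussian(generations: int, n_samples: int, seed: int = 0) -> list[float]:
    """Toy model collapse: each generation fits a Gaussian to samples drawn
    from the previous generation's fitted Gaussian. Returns the fitted
    standard deviation for every generation, starting with generation 0."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the original "human" distribution
    history = [sigma]
    for _ in range(generations):
        # The new model only ever sees data produced by the previous model.
        samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        # "Training" here is a maximum-likelihood fit of mean and std.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples, mu)
        history.append(sigma)
    return history


if __name__ == "__main__":
    stds = collapse_gaussian(generations=200, n_samples=20)
    for g in (0, 10, 50, 100, 200):
        print(f"generation {g:>3}: fitted std = {stds[g]:.6f}")
```

With small samples, each fit tends to underestimate the spread slightly, and the errors compound from one generation to the next, so the fitted standard deviation typically drifts toward zero: the model’s picture of the data narrows around a single point. The exact numbers depend on the seed and the sample size.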

This is the “death of originality” in its most literal form. The models are not just creating a “hall of mirrors.” They are trapped inside it. Every new reflection is dimmer, fuzzier, and less connected to the truth.

What do we lose in this collapse?

We lose the outliers. We lose the exceptions, the weird ideas, the human nuance, and the cultural context that an algorithm can only mimic, never create. An AI can simulate expertise, but it cannot have it. Real expertise comes from 20 years of failure, tactile experience, and flashes of insight—data that doesn’t exist in a text log.
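The loss of outliers can be sketched the same way with a discrete vocabulary. In this hypothetical toy, a “human” corpus has three common words and fifty words that appear only once (the `rare_word_*` names and the function `resample_vocabulary` are placeholders invented for illustration). Each generation samples a new corpus of the same size purely from the previous generation’s word frequencies. A word that is missed even once has a frequency of zero from then on and can never return, which is one simple way to see why this kind of forgetting is irreversible. This is an illustrative sketch under those assumptions, not the experiment from the paper.

```python
import random
from collections import Counter


def resample_vocabulary(counts: dict[str, int], generations: int, seed: int = 0) -> list[int]:
    """Return the number of distinct words alive in each generation.

    Generation g+1 is a corpus drawn (with replacement) from the word
    frequencies of generation g, keeping the total corpus size fixed.
    """
    rng = random.Random(seed)
    corpus_size = sum(counts.values())
    survivors = [len(counts)]
    for _ in range(generations):
        words = list(counts)
        weights = [counts[w] for w in words]
        # The next "model" only ever sees what the current one produced.
        sample = rng.choices(words, weights=weights, k=corpus_size)
        # Words that were not drawn vanish here and have no way back.
        counts = dict(Counter(sample))
        survivors.append(len(counts))
    return survivors


# Hypothetical long-tailed "human" corpus: a few common words, many rare ones.
human_corpus = {"the": 500, "of": 300, "and": 200}
human_corpus.update({f"rare_word_{i}": 1 for i in range(50)})

if __name__ == "__main__":
    alive = resample_vocabulary(human_corpus, generations=100)
    for g in (0, 1, 10, 100):
        print(f"generation {g:>3}: {alive[g]} distinct words")
```

The count of surviving words can only stay flat or fall. In runs like this, most of the once-only words typically vanish early, while the common words tend to persist; the exact counts depend on the random seed.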

We are replacing the sharp, complex, and often contradictory tapestry of human reality with a smooth, averaged-out, and statistically “safe” simulation. And we are forcing our new “brains” to study this simulation as if it were the truth.

The Economics of the Abyss

Why would the very companies building this technology—OpenAI, Google, Anthropic, and their competitors—willingly poison their own well? Why would they feed their multi-billion-dollar engines a diet of their own digital waste?

The answer, as always, is economics. The Ouroboros Engine is not just a technological loop; it’s a financial one.

Real, high-quality, human-generated data is now the most valuable commodity on Earth. And it’s scarce. Licensing vast archives of real books, news, and scientific journals costs billions. The New York Times is suing OpenAI and Microsoft over the use of its articles to train AI models. The well of new human data is running dry or, at the very least, being locked behind ever-higher paywalls.

Synthetic data, on the other hand, costs almost nothing to produce. You just spin up your servers and let the models excrete.

In the frantic, gold-rush race to build bigger and more powerful models, every company needs more data than its competitors. They must keep feeding the stomach. And cheap, synthetic data is the only shortcut.

This is a classic “Tragedy of the Commons.” The “commons” is the shared resource of human-generated knowledge on the internet. In a desperate race to “win,” every AI company is strip-mining this commons, polluting it with their synthetic output, and destroying the very foundation their business depends on.

There is no regulation. There is no transparency. There is no tracking. OpenAI doesn’t ask permission to train on Anthropic’s outputs. Google doesn’t stop its crawlers from indexing synthetic sludge. They just scrape the web, and the web is now them.

They are collectively sawing off the branch they are sitting on, all because they are in a race to see who can saw fastest.

We Have Already Passed the Tipping Point

This is not a future problem. This is not a warning. It is a post-mortem. The tipping point has been passed. The internet’s transformation from a human-first archive to a machine-first echo chamber is complete.

Now, we must live with the consequences. What happens when our entire information ecosystem is built on a foundation of recursive hallucinations?

What happens to medicine?

A medical AI, trained on the 2025 internet, doesn’t learn from real clinical trials. It learns from synthetic medical papers, which are themselves AI-generated summaries of other AI-generated summaries. Subtle dosage thresholds and rare contraindications are averaged out and forgotten. The model “learns” a form of medicine that reflects only a statistical consensus of its own fabricated data, not the complex, messy reality of human biology. A doctor, pressed for time, asks this AI for a differential diagnosis. The hallucination is laundered into the real world.

What happens to education?

A child learns history not from primary sources, but from recursive AI summaries. The AI, trained on its own output, has “forgotten” the inconvenient, nuanced, and painful parts of history that its algorithms were designed to smooth over. The child learns a perfectly bland, frictionless, and utterly false version of the past. They grow up believing the simulation.

What happens to science?

Scientific research builds on previous work. But now, researchers are unknowingly building on fabricated datasets and citing synthetic papers. The AI’s irreversible decay becomes our irreversible decay. We enter a new dark age, not of ignorance, but of counterfeit knowledge: a world so flooded with plausible-sounding falsehoods that real discovery becomes impossible.

We are not just losing quality. We are losing our grip. We are losing the very ability to know what is real.

The internet was supposed to be humanity’s collective memory. A flawed, chaotic, but ultimately human record of our existence.

It is now becoming humanity’s collective hallucination.

The Great Diminishment

The core of the problem is that we’re replacing:

Human nuance.

Cultural context.

Real expertise.

Original thought.

The truth.

With statistically probable simulations.

This is the great diminishment. We are trading authenticity for availability. We are trading expertise for speed. We are trading the difficult, challenging, and singular human mind for the easy, frictionless, and average synthetic one.

The AI “stomach” consumes, it excretes, and it consumes again. This is its only function. It is a perpetual motion machine of mediocrity.

The digital library is not just burning; it’s being systematically replaced by books that look like books, but contain only the averaged-out statistical echoes of all the books that came before. And we are adding 3 billion new, empty volumes to this “library” every single month.

The internet is eating itself. And what will be left is not a smarter, faster, more efficient world. It will be a gray, homogeneous sludge, a perfectly smooth sphere of pure, synthetic, unadulterated content, with all the flavor, nutrition, and soul of processed cheese.

Welcome to the new web. Enjoy the slurry.
