Quantum Computing: The Caltech Processor That Is Redefining the Future

A new threshold has been crossed, a boundary pushed back with the kind of boldness that defines major technological revolutions. In the complex world of quantum computing, a team of physicists from the California Institute of Technology (Caltech) has just shattered the existing records for qubit count. Their achievement? The creation of an experimental quantum processor equipped with 6,100 qubits, a figure that eclipses previous accomplishments, which topped out at around a thousand qubits. This advance, published in the journal Nature on September 24, 2025, is not merely an incremental improvement; it is a giant leap, a paradigm shift that redraws the roadmap toward the universal quantum computer.

The field has never experienced such a surge of activity. Between Google’s Willow chip, Microsoft’s Majorana 1 project, IBM’s advances, and initiatives like QuNorth, the race for quantum supremacy is in full swing. Yet this announcement from Caltech stands out for its scale and for the elegant solution it brings to the discipline’s most fundamental problem. By far surpassing the qubit counts of the industry giants’ prototypes, these researchers are not just winning a round; they are demonstrating the viability of an architecture that could very well become the standard for years to come.

The Quantum Challenge: Building a House of Cards in a Storm

To grasp the significance of this feat, it is essential to understand the fundamental obstacle that stands in the way of quantum engineers: the extreme fragility of qubits. The qubit, or quantum bit, is the basic unit of information in quantum computing. Unlike the classical bit in our computers, which can only take on two values, 0 or 1, the qubit possesses a near-magical property derived from the laws of quantum mechanics: superposition.

Thanks to this principle, a qubit can exist in a weighted combination of the 0 and 1 states at the same time, with the weights setting the probability of finding each value when the qubit is measured. It’s as if, instead of a coin that can only be heads or tails, you had a perpetually spinning coin, containing both possibilities within itself at the same time. This ability to work with a multitude of states at once is what gives quantum computers their potential for exponential speedups on certain problems, some of which would take the world’s most powerful supercomputers billions of years.

But this power comes at an exorbitant cost: instability. A qubit’s state of superposition is incredibly delicate. The slightest external disturbance—a tiny mechanical vibration, a temperature fluctuation of a thousandth of a degree, a stray electromagnetic field—can break this fragile state and cause what physicists call decoherence. The calculation instantly collapses, and the information is lost forever. Trying to build a quantum computer is like trying to build an intricate house of cards in the middle of a constant draft.

Faced with this colossal challenge, the preferred strategy is redundancy. By increasing the number of physical qubits, a large portion can be dedicated to quantum error correction mechanisms. If some “computational” qubits lose their coherence, others, acting as a safety net, can detect and correct the error, thus preserving the integrity of the overall calculation. To return to our analogy, it’s like building an immense house of cards but adding supports and buttresses (the verification qubits) to ensure that the fall of a single card does not bring down the entire structure. It is precisely in this area that the Caltech team has made its masterstroke.
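
The idea of spending extra units on a safety net can be sketched with a classical repetition code. This is only an analogy, not the scheme used at Caltech: real quantum error correction cannot copy a qubit and instead relies on measuring error syndromes. The function names and the 5% flip probability below are assumptions chosen for illustration.

```python
import random


def encode(bit, copies=3):
    """Store one logical bit redundantly as several physical copies."""
    return [bit] * copies


def apply_noise(codeword, flip_prob, rng):
    """Flip each physical bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in codeword]


def decode(codeword):
    """Recover the logical bit by majority vote."""
    return 1 if 2 * sum(codeword) > len(codeword) else 0


def logical_error_rate(flip_prob, copies=3, trials=20_000, seed=0):
    """Estimate how often the majority vote still returns the wrong bit."""
    rng = random.Random(seed)
    errors = 0
    for _ in range(trials):
        bit = rng.randint(0, 1)
        received = apply_noise(encode(bit, copies), flip_prob, rng)
        if decode(received) != bit:
            errors += 1
    return errors / trials


if __name__ == "__main__":
    # More copies should give a lower logical error rate for the same noise.
    for copies in (1, 3, 5):
        print(copies, logical_error_rate(0.05, copies=copies))
```

With a single copy the logical error rate simply equals the physical flip probability; with three or five copies, a single flipped "card" no longer brings down the result, at the price of more physical units per logical bit.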

The Caltech Breakthrough: The Mastery of Neutral Atoms

The secret to Caltech’s success lies in its choice of an architecture that is both bold and remarkably effective: neutral atoms. Their system is built from cesium atoms, individual particles that serve as qubits. To isolate them from the noise of the outside world, these atoms are first cooled to temperatures near absolute zero, then trapped in a near-perfect vacuum chamber.

There, cutting-edge technology comes into play: extremely precise laser beams, acting as “optical tweezers,” grab and hold each atom. This grid of light allows for the positioning of thousands of qubits with unprecedented stability and control. The major advantage of this approach, compared to other popular architectures like superconducting circuits (favored by Google and IBM) or ion traps, is its scalability. Increasing the number of qubits in a superconducting system, for example, considerably complicates the wiring and increases thermal “noise,” making the system increasingly unstable. The neutral atom architecture, however, allows the grid to be expanded to thousands of units without the system losing coherence.

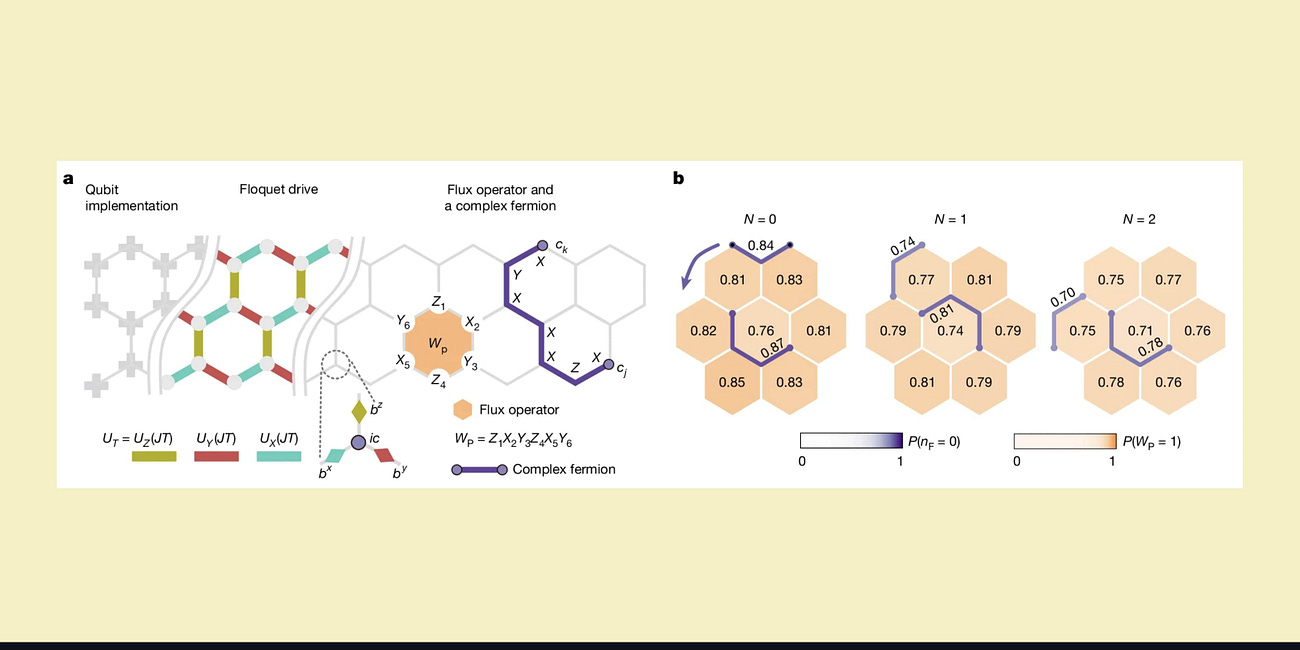

The results obtained by the Caltech team are simply stunning and are measured along three crucial axes:

Quantity: With 6,100 qubits, they have not only crossed a symbolic threshold but have also reached a scale where complex error-correction schemes become conceivable.

Stability: Their qubits remained in a coherent superposition state for nearly 13 seconds. This may seem short, but it is an eternity on the quantum scale, almost ten times longer than previous records. This extra time is fundamental, as it provides a much wider window to perform complex computational operations before decoherence occurs.

Quality: The control of each qubit was achieved with near-perfect fidelity, boasting a success rate of 99.98%. This means that for every 10,000 operations requested, about 9,998 on average yield exactly the expected result. This precision is the cornerstone of reliability, without which a large number of qubits would be useless.
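
To see why that last fraction of a percent matters, here is a small back-of-the-envelope sketch of how errors add up over many operations. It assumes, as a simplification not stated in the study, that every operation fails independently with the same probability; the function names are illustrative.

```python
def expected_failures(fidelity, n_ops):
    """Average number of failed operations out of n_ops."""
    return (1.0 - fidelity) * n_ops


def all_succeed_probability(fidelity, n_ops):
    """Chance that n_ops independent operations all succeed."""
    return fidelity ** n_ops


if __name__ == "__main__":
    fidelity = 0.9998  # the 99.98% figure quoted above
    for n_ops in (1, 100, 1_000, 10_000):
        print(
            n_ops,
            round(expected_failures(fidelity, n_ops), 3),
            round(all_succeed_probability(fidelity, n_ops), 4),
        )
```

Under this assumption, even at 99.98% the chance of a completely error-free run shrinks steadily as the number of operations grows, which is why high fidelity and error correction go hand in hand.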

This triple success shatters a trade-off that seemed inevitable in quantum computing: sacrificing quality for quantity. Gyohei Nomura, one of the lead authors of the study, perfectly summarizes this breakthrough: “The more atoms you handle, the greater the risk of errors... and yet we prove that you can gain in quantity without losing quality.” His colleague, Manuel Endres, adds with palpable optimism: “This is an exciting moment for neutral atom quantum computing. We now see clearly how to build large, error-corrected quantum computers. The foundations have been laid.”

From Quantum Memory to Universal Calculator

It is important to remain measured: the Caltech processor will not dethrone classical supercomputers tomorrow. For now, it is an exceptional prototype, a “proof of concept” that validates the power of its architecture, but it is not yet configured for solving large-scale mathematical problems. However, it demonstrates that it is possible to reconcile a very large number of qubits with rock-solid reliability—two criteria long considered incompatible.

To transform this device from promise to performance, the next step is crucial: mastering and exploiting quantum entanglement on a large scale. Entanglement is a phenomenon where two or more qubits become so intimately linked that they share the same fate, regardless of the distance separating them. Measuring one entangled qubit immediately fixes what will be found when the other is measured. Entanglement is generated by multi-qubit quantum logic gates, and it is what makes the qubits work together collectively.

Currently, the Caltech machine is comparable to a quantum hard drive with a phenomenal capacity: it can store a massive amount of quantum information stably. Entanglement will transform it into a true central processing unit (CPU), capable of executing complex algorithms to unlock challenges that are currently beyond our reach.

The potential applications are staggering and touch every sector of science and industry:

In medicine, simulating the behavior of billions of molecules to design new drugs for cancer or Alzheimer’s disease in a matter of days.

In materials science, creating room-temperature superconductors or ultra-efficient batteries by modeling matter at the atomic level.

In finance, optimizing investment strategies of a complexity inaccessible to current computers.

In ecology, designing catalysts capable of capturing carbon from the atmosphere or optimizing energy grids on a planetary scale.

In fundamental physics, unlocking the mysteries of dark matter or quantum gravity.

The record set by Caltech is therefore not an end in itself. It is the beginning of a new chapter, tangible proof that we are no longer in the realm of science fiction. The road ahead is still long before such computers leave the laboratories, but one thing is certain: we have never been closer to witnessing the birth of powerful, functional, and reliable quantum machines. The dawn of the quantum era is breaking, and its light promises to transform our world.

Short answer: According to quantum mechanics, things can have properties that take on more than one value simultaneously - but when you measure that property, they instantly "choose" one of those values. This idea can also be applied to pairs of things, as explained in more detail below. An object is said to be entangled with another when it has some property that takes on multiple values in a way that depends on the other object. The instantaneous "choosing" of one value (called "collapse") has interesting implications. It means that by measuring one of the entangled objects, you can cause the other object to instantly "choose" a particular value, wherever it is. In some sense, this could be called transmitting information faster than the speed of light. But there is a (big) catch: we have no way of controlling which outcome the measured object will choose. In this sense, you could say that entanglement allows you to transmit random information faster than light. This is perhaps not useful.

Long answer: I will give an example of quantum entanglement. But first, some basic principles of quantum mechanics. An object is described by its quantum state. For the simple example of a photon, its state tells you where the photon is in space, its momentum, and its polarization. In describing the photon's state, however, you don't just give a number for each of those quantities; you essentially give a probability for getting a particular outcome if you were to measure them. (If you recall the uncertainty principle, this means that the narrower the probability distribution of position, the wider the probability distribution of momentum.) For now, we'll ignore position and momentum, and just consider polarization.

Two possible states of a photon's polarization could be horizontal, denoted |H>, or vertical, denoted |V>. So the polarization state could generally be written as a weighted combination of |H> and |V>, written a|H>+b|V>. (Technically, the probability of measuring |H> is |a|^2 and the probability of measuring |V> is |b|^2, where a and b may be complex and |a|^2+|b|^2 = 1.)
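
As a rough illustration of the rule in parentheses, the following sketch turns a pair of amplitudes (a, b) into measurement probabilities and simulates one measurement. The function names are made up for this example, and the code rescales a and b itself so that |a|^2+|b|^2 = 1 holds.

```python
import math
import random


def normalize(a, b):
    """Rescale the amplitudes so that |a|^2 + |b|^2 = 1."""
    norm = math.sqrt(abs(a) ** 2 + abs(b) ** 2)
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    return a / norm, b / norm


def probabilities(a, b):
    """Return (P(H), P(V)) for the state a|H> + b|V>."""
    a, b = normalize(a, b)
    return abs(a) ** 2, abs(b) ** 2


def measure(a, b, rng):
    """Simulate one polarization measurement; returns 'H' or 'V'."""
    p_h, _ = probabilities(a, b)
    return "H" if rng.random() < p_h else "V"


if __name__ == "__main__":
    rng = random.Random()
    # Equal weights with a complex phase on |V>: still a 50/50 outcome.
    print(probabilities(1, 1j))
    print([measure(1, 1j, rng) for _ in range(10)])
```

Note that the complex phase of b does not change the probabilities for this particular measurement; only the magnitudes |a| and |b| do.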

The photon state, described by a|H>+b|V> should be understood to mean that the photon is simultaneously polarized both horizontally and vertically. This is an important point: In quantum mechanics, something can have parameters that take on two (or more) values at the same time (be in two places, have two energies, have two polarizations, etc.) Once you measure the system, then one of the options is instantly chosen, and then the parameter that you have measured has a well-defined value. (If this sounds weird, it is, and it is not known why this happens.)

OK, on to entanglement finally. Consider the state of a pair of photons. It turns out that it is possible to generate a pair of photons in which each photon's probabilities for |H> and |V> depend on the other's. An example of such a state could be written a|H>|V>+b|V>|H>, where |a|^2 and |b|^2 are the probabilities of |H>|V> and |V>|H> respectively. Here, |H>|V> means the situation where photon 1 is |H> and photon 2 is |V>.

The state a|H>|V>+b|V>|H> means that the pair of photons is simultaneously in the situation with (photon 1 |H> and photon 2 |V>) and the situation with (photon 1 |V> and photon 2 |H>).

So what happens if you measure one of the photons? Say you measure the polarization of photon 1. You get either |H> or |V> as your result, with probabilities |a|^2 and |b|^2 respectively. Say you measure |H>. Now the state of the pair of photons immediately collapses into |H>|V>. On the other hand, if you measure |V>, the state immediately becomes |V>|H>.

This is quite odd. As far as we know, this collapse of the state happens instantaneously, no matter how far apart the two photons are. But can it be used to transmit information?

The idea for a communication device would be to generate pairs of entangled photons in the state (|H>|V>+|V>|H>) in your lab, send one of them through an optical fiber to Bob on the other side of the world, and send the other into an optical fiber of the same length inside your lab. When your photon comes out the other end of the fiber, you measure its polarization. The protocol is that a |H> photon is a 1 and a |V> photon is a 0. Let's say you measure the first 8 photons to come out and get 01101010. You know that at that moment, on the other side of the world, Bob is measuring 10010101. You could say that you instantaneously sent the message "10010101" - the only problem is that you had no control over what the message was. It was totally random. This is a general problem with transmitting information using entanglement - the whole idea is based on this quantum indeterminacy. As far as we know there is no way around it.
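
The protocol above can be mimicked with a few lines of code. This is a classical toy model, not a real quantum simulation: it only reproduces the statistics of measuring both photons in the H/V basis, assuming equal weights for |H>|V> and |V>|H>. All names are illustrative.

```python
import random


def measure_entangled_pair(rng):
    """One pair in the equal-weight state: a 50/50 choice, always opposite."""
    if rng.random() < 0.5:
        return "H", "V"  # the pair collapsed into |H>|V>
    return "V", "H"      # the pair collapsed into |V>|H>


def to_bit(polarization):
    """Protocol from the text: |H> is a 1 and |V> is a 0."""
    return 1 if polarization == "H" else 0


def run_protocol(n_pairs, seed=None):
    """Return the bit strings read by you (Alice) and by Bob."""
    rng = random.Random(seed)
    alice_bits, bob_bits = [], []
    for _ in range(n_pairs):
        yours, bobs = measure_entangled_pair(rng)
        alice_bits.append(to_bit(yours))
        bob_bits.append(to_bit(bobs))
    return alice_bits, bob_bits


if __name__ == "__main__":
    alice_bits, bob_bits = run_protocol(8)
    print("you:", "".join(map(str, alice_bits)))
    print("Bob:", "".join(map(str, bob_bits)))
```

Notice that `run_protocol` takes no message argument: there is nowhere to put one. Bob's string is always the exact complement of yours, but neither of you gets to pick the bits, which is the catch described above.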

That’s an incredible milestone: 6,100 qubits isn’t just progress, it’s a whole new chapter. Caltech didn’t just scale up; they proved that quantum computing’s next era is no longer theory. It’s happening right now.