Rather Than Embracing the AI Revolution, Apple Prefers to Disparage Its Competitors’ Most Popular Models.

A study by Apple seeks to demonstrate that AI models such as Claude, DeepSeek-R1, and o3-mini do not reason at all.

It is widely acknowledged that Apple has fallen behind its competitors in AI. Apple is not alone: Meta, Facebook's parent company, is facing similar difficulties. That is why Mark Zuckerberg decided that Meta would make a “significant” investment in the AI start-up Scale AI, valuing it at $29 billion. This is a sign that Meta is stepping up its AI efforts, and it is spending $14 billion on the deal in an attempt to catch up.

As part of the agreement announced on Thursday, June 12, 2025, Scale AI CEO Alexandr Wang will join Meta “to participate in our work on superintelligence,” according to the group led by Mark Zuckerberg.

While Meta has decided to take the bull by the horns, Apple does not seem willing to invest heavily at this time. Instead, it appears to want to discredit the reasoning abilities of today's most popular AI models.

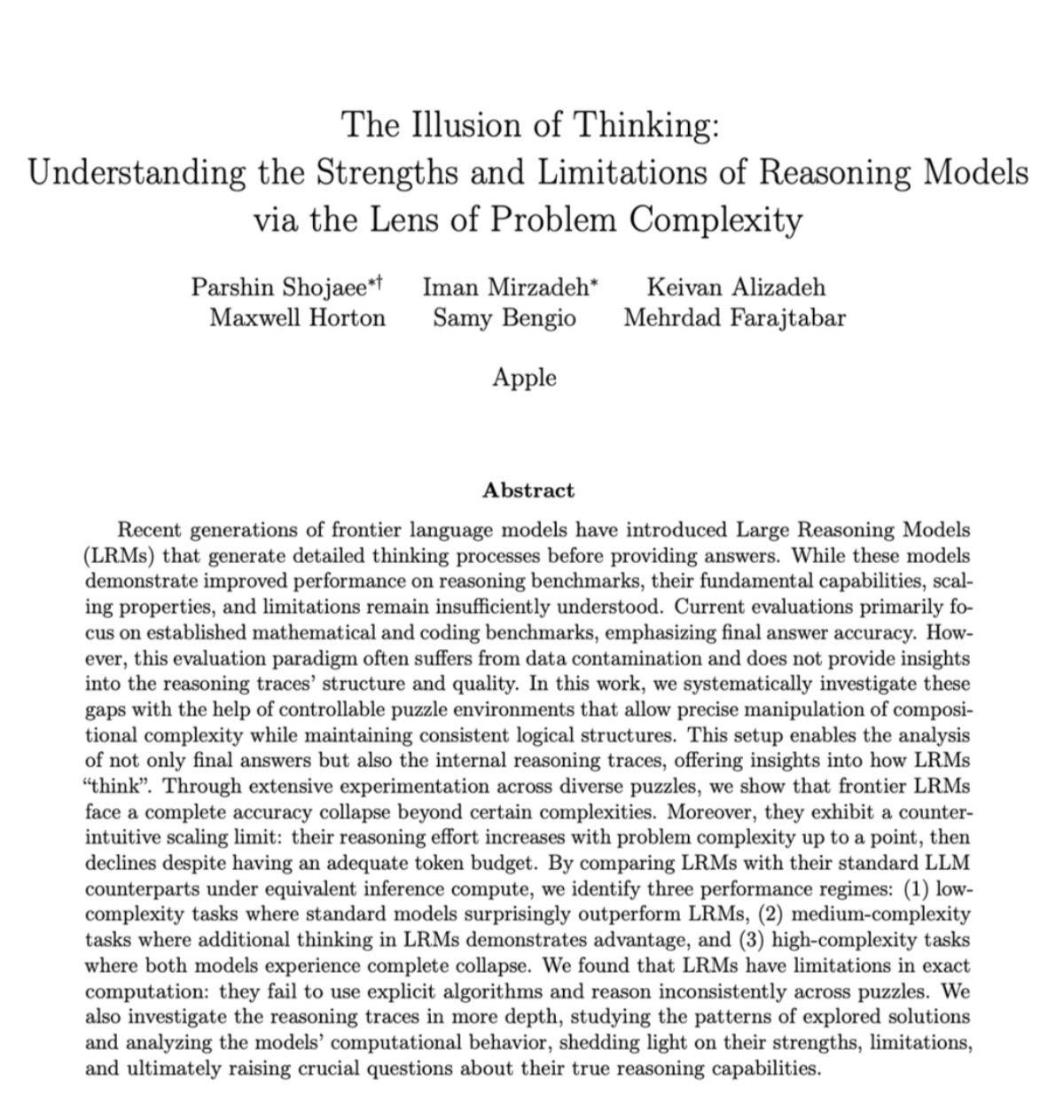

In a recent study entitled “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity,” Apple researchers examined the reasoning abilities of models such as Claude, DeepSeek-R1, and o3-mini. They even claim that these models do not reason at all, but merely memorize patterns impressively well.

Instead of relying on the classic mathematical benchmarks that AI companies often boast about, Apple built controllable puzzle environments. The researchers tested Claude Thinking, DeepSeek-R1, and o3-mini on problems designed to reduce the chance that the models had already seen them during training.

The result is enlightening:

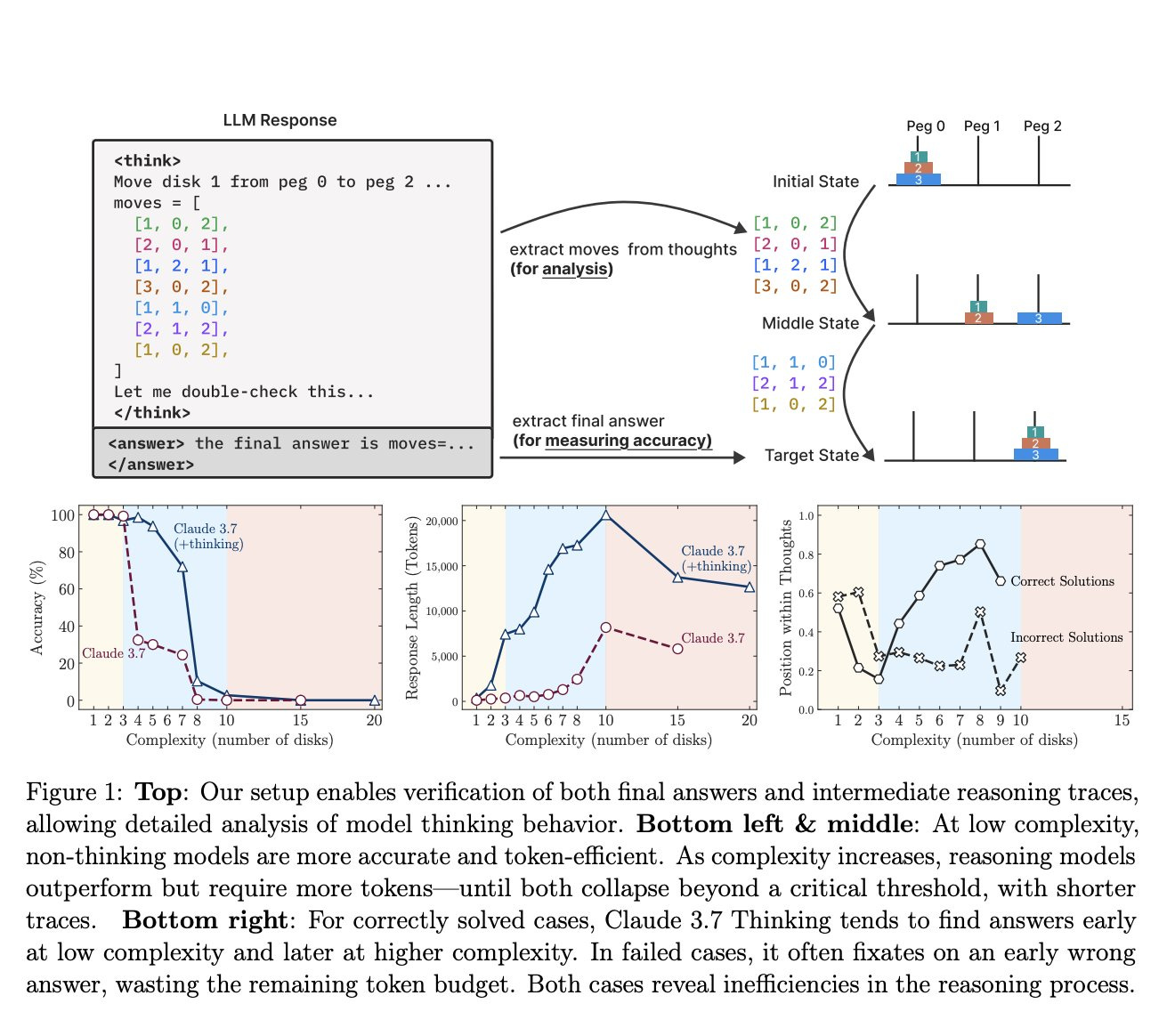

Beyond a certain level of complexity, all the “reasoning” models hit a wall and break down completely, with accuracy dropping to 0%. Giving them more computing power does not help them solve these harder problems.

Counterintuitively, as problems approached this breaking point, the “thinking” models began to think less: they used fewer reasoning tokens and gave up sooner, even though they still had plenty of token budget left.

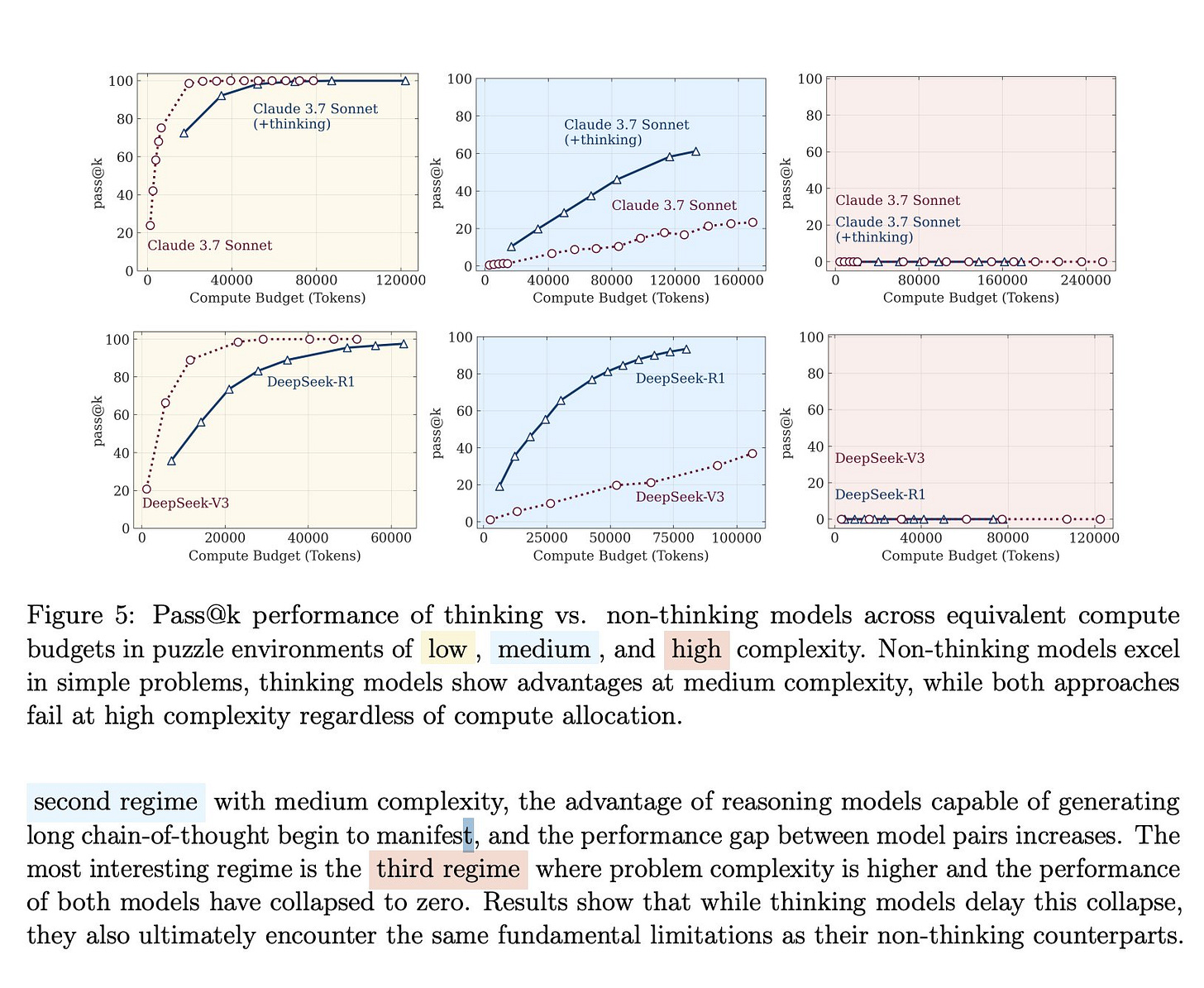

Apple researchers even tried giving the models the exact solution algorithm, which is like giving someone step-by-step instructions for baking a cake. The models still failed at roughly the same levels of complexity; they could not even follow the instructions consistently.
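The article does not say which algorithm the researchers supplied. Purely as an illustration, assuming the Tower of Hanoi puzzle discussed later in this article, the kind of step-by-step procedure in question is the classic recursive solution sketched below (the function name and peg labels are hypothetical). Executing it correctly requires no insight at all, only following each step faithfully.

```python
def hanoi_moves(n, source="A", target="C", spare="B"):
    """Return the (disk, from_peg, to_peg) moves that solve an n-disk Tower of Hanoi.

    Classic recursive algorithm: move the top n-1 disks out of the way,
    move the largest disk, then move the n-1 disks back on top of it.
    """
    if n == 0:
        return []
    moves = hanoi_moves(n - 1, source, spare, target)   # park the top n-1 disks on the spare peg
    moves.append((n, source, target))                  # move the largest disk to the target peg
    moves += hanoi_moves(n - 1, spare, target, source)  # restack the n-1 disks on top of it
    return moves


if __name__ == "__main__":
    print(len(hanoi_moves(7)))  # 127
```

The recursion makes the point of the experiment concrete: the instructions are fully mechanical, so failing to follow them at larger sizes cannot be blamed on missing knowledge of the method.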

Apple's study revealed three regimes:

Low complexity: standard (non-reasoning) models are the real winners.

Medium complexity: “Thinking” models have an advantage.

High complexity: both kinds of models collapse completely.

Arguably, many of the problems we most want AI to solve fall into this third category.

According to Apple, these models do not reason at all; instead, they perform sophisticated pattern matching that works well until the patterns become too complex.

They then collapse like a house of cards!

If these models truly reasoned, they should improve with more computation and clearer instructions. Instead, they hit a wall and start to give up.

Is it intelligence or memorization that is reaching its limits?

This research suggests that we are not as close to artificial general intelligence (AGI) as some claim. Current advances in “reasoning” may face fundamental obstacles that cannot be overcome simply by adding more data or compute.

The models could produce more than 100 correct moves in the Tower of Hanoi puzzle, yet failed after only 4 moves in the River Crossing puzzle. This suggests that they memorized Tower of Hanoi solutions during training rather than reasoning their way through the puzzles.
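A quick calculation puts the Tower of Hanoi figure in perspective. The shortest solution for $n$ disks follows a simple recurrence (move $n-1$ disks aside, move the largest disk, move the $n-1$ disks back), so a full solution only exceeds 100 moves once the puzzle has at least seven disks. The article does not state which disk counts were tested; this is just standard arithmetic on the puzzle, with $M(n)$ denoting the minimum number of moves.

$$
M(1) = 1, \qquad M(n) = 2\,M(n-1) + 1 \;\;\Longrightarrow\;\; M(n) = 2^{n} - 1
$$

$$
M(6) = 2^{6} - 1 = 63, \qquad M(7) = 2^{7} - 1 = 127 > 100
$$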

While AI companies tout the “thinking” of their models, the message of Apple's study can be summed up as:

“Everyone is celebrating fake reasoning.”

The industry is relying on metrics that do not measure real intelligence.

Apple researchers used controllable puzzle environments specifically because:

They avoid data contamination.

They require pure logical reasoning.

They allow complexity to be scaled precisely.

They reveal the breaking points of models.
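To make the idea of a controllable puzzle environment concrete, here is a minimal sketch of a Tower of Hanoi move checker. It is not Apple's code; the function name `check_solution` and the `(from_peg, to_peg)` move format are hypothetical. Complexity is set by a single parameter, the number of disks, and every move in a model's answer can be verified, so an evaluator can report exactly where a solution first goes wrong instead of only marking the final answer right or wrong.

```python
def check_solution(n_disks, moves):
    """Replay (from_peg, to_peg) moves on an n-disk Tower of Hanoi with pegs A, B, C.

    Returns (valid_moves, solved): how many moves were legal before the first
    illegal one, and whether the puzzle ended with all disks on peg C.
    """
    pegs = {"A": list(range(n_disks, 0, -1)), "B": [], "C": []}  # each list runs bottom -> top
    for i, (src, dst) in enumerate(moves):
        if src not in pegs or dst not in pegs or not pegs[src]:
            return i, False  # unknown peg, or nothing to move on the source peg
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            return i, False  # a larger disk may not be placed on a smaller one
        pegs[dst].append(pegs[src].pop())
    # Every move was legal, so disks on C are in order; solved means all are there.
    return len(moves), len(pegs["C"]) == n_disks


if __name__ == "__main__":
    optimal = [("A", "C"), ("A", "B"), ("C", "B"), ("A", "C"), ("B", "A"), ("B", "C"), ("A", "C")]
    print(check_solution(3, optimal))  # (7, True)
    flawed = [("A", "C"), ("A", "C")]  # second move puts disk 2 on top of disk 1
    print(check_solution(3, flawed))   # (1, False)
```

Raising `n_disks` one step at a time and recording where a model's moves first fail is one simple way such an environment could locate a model's breaking point.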

This is a smart experimental design, in my opinion. I invite you to read this fascinating study for yourself.

What do you think?

Is Apple simply trying to disparage the competition because it has been overtaken in AI development over the past two years?

Or is it right?

The answer could also lie somewhere in between.

Let me know what you think in the comments.
