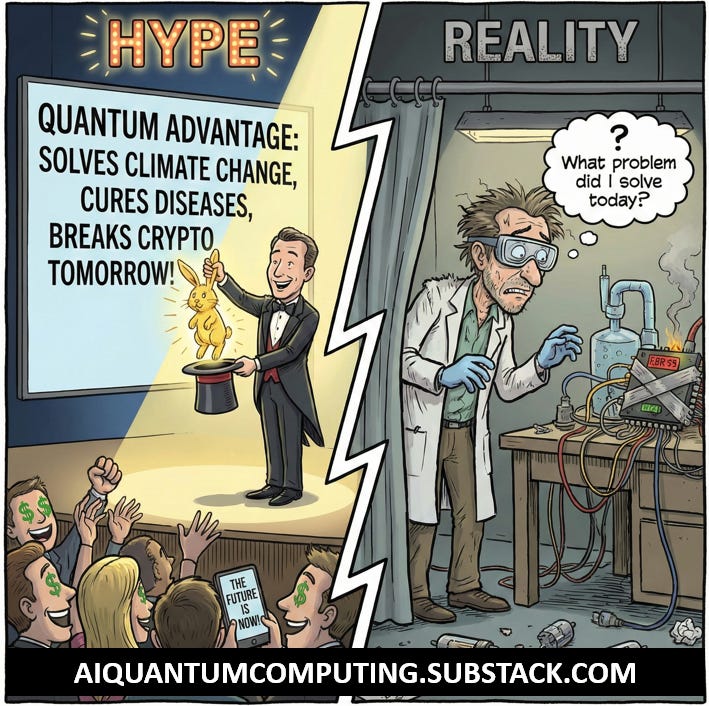

Quantum Computing: The Great Illusion of Progress.

Separating the Billion-Dollar Hype from the Zero-Utility Reality.

Every few months, the cycle repeats. The tech world is jolted awake by a press release designed to trigger both awe and fear of missing out (FOMO). The headlines scream in bold type:

“6,000 Qubits Achieved!”

“Quantum Advantage: The Threshold Has Been Crossed!”

“The Revolution is Here: Classical Computing is Dead!”

Venture capital flows like wine; government policymakers scramble to draft “national quantum strategies”; and LinkedIn feeds fill with breathless commentary about how the world has fundamentally changed overnight.

But if you strip away the sleek marketing videos, the artist’s renditions of gold-plated chandeliers, and the corporate press kits, and you ask a brutally simple question, the silence is deafening.

What exactly was solved today? What real-world problem has been fixed?

The answer, if we are brave enough to face it, is uncomfortable. We are currently living through a period of intellectual malpractice. We have conflated scientific curiosity with industrial readiness. We have confused complexity with utility. And in doing so, we are risking the credibility of one of the most beautiful fields in physics.

It is time to stop lying to ourselves. It is time to dissect the hype, layer by layer, and look at the naked reality of quantum computing as it stands today.

1. The Numerology of Qubits: Why Count != Compute

The primary metric for the quantum arms race is the “Qubit Count.” It is the easiest number for a layperson to understand. If Company A has 50 qubits and Company B has 1,000, Company B must be winning, right?

This is a dangerous fallacy.

The current generation of machines operates in the NISQ era (Noisy Intermediate-Scale Quantum). In this regime, quoting the number of physical qubits is meaningless without context regarding their quality: gate error rates, coherence times, and connectivity.

The Fragile Analog Nightmare

A classical bit is a fortress of stability. It is either a 0 or a 1. A physical qubit, however, is a fragile analog system trying to maintain a coherent quantum state against a universe that desperately wants to measure it and force it to decohere.

When a headline boasts of “thousands of neutral atoms” or “hundreds of superconducting qubits,” it is describing physical entities that are:

Prone to Gate Errors: Every operation performed on these qubits introduces noise.

Short-lived: They lose their quantum information within microseconds to seconds, depending on the platform (superconducting circuits sit at the short end).

Not Fault-Tolerant: There is currently no mechanism running at scale to correct these errors in real time without destroying the computation.

Running a shallow circuit on 6,000 noisy qubits is not a computation; it is a calibration test. If the error rate is high, adding more qubits actually makes the system worse, not better, because you are simply increasing the probability of a catastrophic failure in the calculation.
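To make that scaling argument concrete, here is a minimal sketch under a deliberately generous assumption: every gate fails independently with the same probability p, so a circuit of G gates runs error-free with probability (1 - p)^G. The 0.5% error rate and the hypothetical brickwork circuit shape below are placeholders, not figures for any real device.

```python
import math


def error_free_probability(gate_error: float, num_gates: int) -> float:
    """Probability that no gate fails, assuming independent, identical gate errors."""
    # (1 - p)^G computed via log1p, which stays accurate for small error rates.
    return math.exp(num_gates * math.log1p(-gate_error))


def gates_in_layered_circuit(num_qubits: int, depth: int) -> int:
    """Hypothetical brickwork circuit: each layer pairs up the qubits with two-qubit gates."""
    return (num_qubits // 2) * depth


if __name__ == "__main__":
    gate_error = 0.005  # hypothetical 0.5% two-qubit gate error
    depth = 10          # hypothetical "shallow" circuit depth
    for n in (50, 500, 6000):
        g = gates_in_layered_circuit(n, depth)
        p_ok = error_free_probability(gate_error, g)
        print(f"{n:>5} qubits, {g:>6} gates -> P(no error) = {p_ok:.3e}")
```

Even with no crosstalk, leakage, or readout error in the model, the chance of a clean run collapses exponentially as gates are added. That is why piling noisy qubits into a wider circuit, without error correction, makes the output less trustworthy rather than more.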

The Reality: Until we have Logical Qubits, where hundreds to thousands of physical qubits are stitched together to form a single, error-corrected unit, qubit counts are merely vanity metrics. We are counting the bricks in a pile and calling it a cathedral.

2. The “Quantum Advantage” Mirage

The term “Quantum Advantage” (or Quantum Supremacy) is the Holy Grail of the field. It defines the moment a quantum computer performs a task that no classical computer can complete in any reasonable amount of time.

Google claimed it in 2019. Others have claimed it since. The narrative is always the same: “This task took our quantum processor 200 seconds, but would take a classical supercomputer 10,000 years!”

But let’s look at the “task.”

Random Circuit Sampling (RCS)

The benchmark used for these claims is almost exclusively Random Circuit Sampling. The computer is asked to sample from the probability distribution of outputs generated by a random quantum circuit.

Who needs this? No one.

Does it simulate a molecule? No.

Does it optimize a logistics chain? No.

Does it crack a code? No.

It is a problem designed with a specific circular logic: it was chosen because it is hard for classical computers to simulate, not because the answer provides any value. It is a complexity-theory demonstration, akin to proving you can build a Rube Goldberg machine that is harder to model mathematically than a toaster.
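Why is this task hard to simulate at all? The naive classical approach stores the full state vector: 2^n complex amplitudes for n qubits, each taking 16 bytes at double precision. The sketch below only does that arithmetic. It is a back-of-the-envelope bound on brute-force simulation, and it is exactly the bound that smarter methods such as tensor-network contraction sidestep by never storing the full vector.

```python
BYTES_PER_AMPLITUDE = 16  # one complex128 value: two 64-bit floats


def state_vector_bytes(num_qubits: int) -> int:
    """Memory needed to store every amplitude of an n-qubit pure state."""
    return BYTES_PER_AMPLITUDE * 2**num_qubits


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary prefixes (KiB, MiB, ...)."""
    value = float(num_bytes)
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB"):
        if value < 1024:
            return f"{value:.1f} {unit}"
        value /= 1024
    return f"{value:.1f} EiB"


if __name__ == "__main__":
    # 53 is the qubit count of Google's 2019 Sycamore experiment.
    for n in (20, 30, 40, 53):
        print(f"{n} qubits -> {human_readable(state_vector_bytes(n))}")
```

Each extra qubit doubles the memory, so brute force hits a wall in the tens of qubits. The “hardness” belongs to this particular simulation strategy, which is why better classical algorithms keep moving the goalposts.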

The Moving Goalposts

Furthermore, the claim of “10,000 years” usually evaporates within months.

Algorithmic Improvement: Classical computer scientists, stung by the challenge, improve their algorithms (e.g., using tensor networks).

Hardware Utilization: They optimize the code for Summit or Frontier (massive classical supercomputers).

Suddenly, the “10,000-year” task can be reproduced in hours on a cluster of GPUs. The “Advantage” wasn’t a physical absolute; it was a temporary gap in software optimization.

The Reality: Performing a useless task slightly faster than a handicapped classical baseline is not a revolution. It is a parlor trick.

3. The “Useful Advantage” Claims: Chemistry and Optimization

This is where the marketing becomes predatory. While physicists might care about Random Circuit Sampling, investors care about drugs, batteries, and logistics.

You have likely read that quantum computers are “revolutionizing drug discovery” or “optimizing financial portfolios.”

If you dig into the footnotes of the papers making these claims, the illusion shatters.

The “Toy Model” Problem

When a paper claims to calculate the ground state energy of a molecule, look at the molecule. It is almost always something like:

Hydrogen (H2)

Lithium Hydride (LiH)

Perhaps a small chain of water molecules.

We solved the Schrödinger equation for these systems classically decades ago. We can simulate them on an ordinary laptop. The quantum “solution” usually involves:

Heavy Classical Preprocessing: A classical computer does most of the work to simplify the problem (for example, choosing a small basis set and freezing or discarding most orbitals).

Variational Algorithms (VQE/QAOA): The quantum computer acts as a noisy estimator within a classical feedback loop.

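Barren Plateaus: As the problem scales, the optimization landscape becomes flat (a “barren plateau”): gradients shrink exponentially with the number of qubits, so the optimizer cannot tell which way to move without an exponentially growing number of measurements.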
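The variational loop is easier to see in a toy. The sketch below runs a one-parameter VQE on a hypothetical single-qubit Hamiltonian H = a·Z + b·X (the coefficients are made up and do not describe any real molecule). The “quantum” part is simulated: the energy is estimated from a finite number of simulated measurement shots, so it is noisy, and a classical gradient-descent loop adjusts the parameter using parameter-shift gradients. The exact answer is then obtained by diagonalizing the 2×2 matrix directly, which is the point: at this size, the classical computer does not need the quantum one at all.

```python
import numpy as np

# Hypothetical Hamiltonian coefficients: H = A_Z * Z + B_X * X (not a real molecule).
A_Z, B_X = 0.8, -0.6
PAULI_Z = np.array([[1.0, 0.0], [0.0, -1.0]])
PAULI_X = np.array([[0.0, 1.0], [1.0, 0.0]])


def estimate_expectation(p_plus: float, shots: int, rng: np.random.Generator) -> float:
    """Estimate a +/-1 observable from `shots` simulated measurements."""
    plus_counts = rng.binomial(shots, p_plus)
    return 2.0 * plus_counts / shots - 1.0


def noisy_energy(theta: float, shots: int, rng: np.random.Generator) -> float:
    """Shot-noise estimate of <psi(theta)|H|psi(theta)> for psi(theta) = Ry(theta)|0>."""
    # For this ansatz <Z> = cos(theta) and <X> = sin(theta); we sample instead of reading them off.
    z = estimate_expectation((1.0 + np.cos(theta)) / 2.0, shots, rng)
    x = estimate_expectation((1.0 + np.sin(theta)) / 2.0, shots, rng)
    return A_Z * z + B_X * x


def run_vqe(steps: int = 200, lr: float = 0.2, shots: int = 2000, seed: int = 0) -> float:
    """Classical gradient descent driven by noisy parameter-shift gradient estimates."""
    rng = np.random.default_rng(seed)
    theta = 0.1
    for _ in range(steps):
        grad = 0.5 * (noisy_energy(theta + np.pi / 2, shots, rng)
                      - noisy_energy(theta - np.pi / 2, shots, rng))
        theta -= lr * grad
    return noisy_energy(theta, shots, rng)


if __name__ == "__main__":
    hamiltonian = A_Z * PAULI_Z + B_X * PAULI_X
    exact = np.linalg.eigvalsh(hamiltonian)[0]  # classical exact diagonalization
    print(f"VQE estimate: {run_vqe():.3f}   exact ground energy: {exact:.3f}")
```

Even in this best case (one qubit, no gate noise, only shot noise), every energy evaluation costs thousands of repetitions, and the optimizer only ever sees a noisy number. Scaling the ansatz up is where barren plateaus bite: the gradients this loop relies on become too small to distinguish from that shot noise.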

No Real-World Deliverables

There is not a single pharmaceutical compound in clinical trials today that was discovered by a quantum computer. There is no new battery cathode material derived from a QPU (Quantum Processing Unit). There is no truck route optimized by quantum annealing that couldn’t have been optimized better by a standard heuristic algorithm running on AWS.
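For a sense of what “a standard heuristic” means here, below is a minimal sketch of one of the oldest: build a tour greedily (nearest neighbour), then repeatedly reverse segments while that shortens it (2-opt). It solves the plain travelling-salesman problem on random points, a hypothetical stand-in for a real routing instance with time windows and capacities; production solvers are far more sophisticated.

```python
import math
import random


def tour_length(points, tour):
    """Total length of a closed tour visiting `points` in the order given by `tour`."""
    return sum(math.dist(points[tour[i]], points[tour[i - 1]]) for i in range(len(tour)))


def nearest_neighbour_tour(points):
    """Greedy construction: always move to the closest unvisited point."""
    unvisited = set(range(1, len(points)))
    tour = [0]
    while unvisited:
        last = points[tour[-1]]
        nxt = min(unvisited, key=lambda j: math.dist(last, points[j]))
        unvisited.remove(nxt)
        tour.append(nxt)
    return tour


def two_opt(points, tour):
    """Reverse segments while doing so shortens the tour (first-improvement 2-opt)."""
    tour = tour[:]
    improved = True
    while improved:
        improved = False
        for i in range(1, len(tour) - 1):
            for j in range(i + 1, len(tour)):
                a, b = points[tour[i - 1]], points[tour[i]]
                c, d = points[tour[j]], points[tour[(j + 1) % len(tour)]]
                # Replace edges (a,b) and (c,d) with (a,c) and (b,d) if that is shorter.
                delta = (math.dist(a, c) + math.dist(b, d)) - (math.dist(a, b) + math.dist(c, d))
                if delta < -1e-12:
                    tour[i:j + 1] = reversed(tour[i:j + 1])
                    improved = True
    return tour


if __name__ == "__main__":
    rng = random.Random(42)
    pts = [(rng.random(), rng.random()) for _ in range(100)]
    greedy = nearest_neighbour_tour(pts)
    better = two_opt(pts, greedy)
    print(f"nearest neighbour: {tour_length(pts, greedy):.3f}  after 2-opt: {tour_length(pts, better):.3f}")
```

Nothing here is exotic. Heuristics like this, and their much stronger descendants, are the baseline any quantum routing claim has to beat on the same instance.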

The Reality: The moment you scale these problems to a size where they become industrially relevant, current quantum hardware drowns in noise. The moment you remove the artificial constraints, classical heuristics beat the quantum algorithms hands down.

4. The Hard Truth of Error Correction

To be fair, there is one area of genuine, rigorous progress: Quantum Error Correction (QEC).

This is the only path forward. However, we must be precise about where we are. We are currently demonstrating primitives.

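We have shown that we can detect errors without measuring the data: ancilla qubits measure parity checks (syndromes), revealing that an error occurred without collapsing the encoded information.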

We have shown that increasing code distance can suppress errors (under very specific conditions, chiefly that physical error rates sit below the code’s threshold).
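The “very specific conditions” can be illustrated with the simplest code there is, the classical repetition code: store one bit as d copies and decode by majority vote. This is a deliberately simplified stand-in, not the surface code used in real experiments. It only handles bit flips, and it assumes independent errors with probability p per copy and perfect decoding. It does, however, show threshold behaviour: more redundancy helps only when p is below the threshold (here 1/2).

```python
from math import comb


def logical_error_rate(p: float, distance: int) -> float:
    """Probability that majority vote over `distance` copies decodes the wrong bit.

    Assumes each copy flips independently with probability p and `distance` is odd.
    """
    if distance % 2 == 0:
        raise ValueError("use an odd distance so majority vote never ties")
    failures_needed = distance // 2 + 1
    return sum(
        comb(distance, k) * p**k * (1 - p) ** (distance - k)
        for k in range(failures_needed, distance + 1)
    )


if __name__ == "__main__":
    for p in (0.01, 0.6):
        rates = ", ".join(f"d={d}: {logical_error_rate(p, d):.2e}" for d in (1, 3, 5, 7))
        print(f"p={p}: {rates}")
```

Below threshold, each step up in distance pushes the logical error rate down; above it, redundancy makes things worse. Real devices must sit comfortably below their code’s threshold, under far messier noise than this model, for the trend to hold.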

But the overhead is punishing. To create one logical qubit that can run for a meaningful amount of time, we might need 1,000 physical qubits. To run a useful algorithm (like Shor’s algorithm for factoring), we need millions of physical qubits.
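Where does a figure like 1,000 come from? A common layout, the rotated surface code, uses d² data qubits plus d² − 1 measurement qubits for a code of distance d, i.e. 2d² − 1 physical qubits per logical qubit. The sketch below does that arithmetic. The distance of 25 and the count of 4,000 logical qubits are hypothetical round numbers chosen for illustration, and the count ignores routing space and magic-state factories, which add substantially more.

```python
def physical_per_logical(distance: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance**2 - 1


def total_physical_qubits(logical_qubits: int, distance: int) -> int:
    """Data-block footprint only; ignores routing and magic-state factories."""
    return logical_qubits * physical_per_logical(distance)


if __name__ == "__main__":
    d = 25           # hypothetical code distance
    logical = 4_000  # hypothetical logical-qubit count for a large algorithm
    print(f"per logical qubit: {physical_per_logical(d):,}")
    print(f"for {logical:,} logical qubits: {total_physical_qubits(logical, d):,}")
```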

The “Magic State” Bottleneck

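Furthermore, simply correcting errors isn’t enough. Circuits built only from Clifford gates can be simulated efficiently on a classical computer (the Gottesman–Knill theorem), so useful computation also needs non-Clifford gates. In leading architectures such as the surface code, these are typically implemented via something called “Magic State Distillation.” This is a resource-hogging process that consumes large numbers of qubits and a great deal of time.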
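The cost compounds because distillation is recursive. In the widely cited 15-to-1 protocol, 15 noisy magic states with error ε go in and one comes out with error of roughly 35ε³ (to leading order, assuming ideal Clifford operations). The sketch below simply iterates that formula; the input error rate of 10⁻³ is a hypothetical value, and real factories also pay in space and time that this model ignores.

```python
def distill(input_error: float, rounds: int) -> tuple[float, int]:
    """Iterate 15-to-1 distillation using the leading-order estimate eps_out = 35 * eps_in**3.

    Returns (output error, noisy input states consumed per output state).
    Assumes perfect Clifford gates and ignores distillation failures and retries.
    """
    error, cost = input_error, 1
    for _ in range(rounds):
        error = 35 * error**3
        cost *= 15
    return error, cost


if __name__ == "__main__":
    for r in range(3):
        err, cost = distill(1e-3, r)
        print(f"{r} rounds: error ~ {err:.1e}, inputs per output = {cost}")
```

Two rounds take the error from 10⁻³ to around 10⁻²¹, but each output state consumes 225 noisy inputs, and a large algorithm can consume an enormous number of these states.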

The Reality: We are currently in the engineering validation phase of the most basic components. We are building the vacuum tubes; we are not yet building the ENIAC, let alone the iPhone. Claiming we have a computer now is like claiming you have a functioning airline because you successfully tested a wind tunnel model of a wing.

5. Cryptography: The Fearmongering Machine

Perhaps the most dishonest narrative is the “Quantum Apocalypse”—the idea that RSA encryption is dead and your bank account is vulnerable today.

Let’s be absolutely clear:

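RSA-2048: To break it, we need a quantum computer with roughly 20 million noisy physical qubits running for about eight hours (Gidney and Ekerå’s 2019 estimate, assuming a surface code architecture). More recent estimates are lower, but still orders of magnitude beyond any machine that exists.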

Current Status: The largest number factored by a quantum computer using Shor’s algorithm is 15 (or 21, depending on how you define “cheating” with prior knowledge).
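It helps to see how little of Shor’s algorithm is actually quantum. The quantum computer’s only job is order finding: given a coprime to N, find the smallest r with a^r ≡ 1 (mod N). Everything else is classical number theory. The sketch below performs that classical reduction and replaces the quantum step with a brute-force loop, which is trivial for N = 15 and exponentially slow (in the number of bits) for a 2048-bit modulus. That loop is precisely what quantum hardware would need to replace.

```python
from math import gcd
from typing import Optional, Tuple


def order_brute_force(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1; the step Shor's algorithm performs quantumly.

    Assumes gcd(a, n) == 1; otherwise no such r exists and the loop never ends.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_part(n: int, a: int) -> Optional[Tuple[int, int]]:
    """Turn the order of `a` mod `n` into factors, following Shor's reduction.

    Returns a pair of nontrivial factors, or None if this choice of `a` fails
    (a real run would then retry with a different `a`).
    """
    g = gcd(a, n)
    if g != 1:
        return g, n // g  # lucky guess: a already shares a factor with n
    r = order_brute_force(a, n)
    if r % 2 == 1:
        return None
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # a^(r/2) = -1 mod n yields only trivial factors
    p = gcd(half - 1, n)
    return p, n // p


if __name__ == "__main__":
    print(shor_classical_part(15, 7))  # order of 7 mod 15 is 4 -> (3, 5)
    print(shor_classical_part(21, 2))  # order of 2 mod 21 is 6 -> (7, 3)
```

The quantum speedup lives entirely inside order finding. The demonstrations at N = 15 and 21 are small enough that the classical loop above finishes instantly.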

There are papers claiming to factor larger numbers using “quantum annealing” or “variational approaches,” but these are heuristic methods that do not scale. They are mathematical curiosities that offer no exponential speedup.

Shor’s algorithm is a masterpiece of theory. It exists on paper. It does not exist in reality at any useful scale. Anyone selling you “Post-Quantum Cryptography” (PQC) solutions based on an imminent threat is selling you fire insurance for a house that hasn’t been built yet. To be fair, migrating to PQC is good hygiene for the long term; the panic, however, is manufactured.

6. The Danger of the Hype: Why This Matters

Why be such a killjoy? If the funding leads to better physics, who cares if the marketing is exaggerated?

We should all care. Overselling science is not a victimless crime.

1. The “Quantum Winter” Risk

History is littered with fields that promised the moon and delivered dust. Artificial Intelligence suffered two “winters” in which funding evaporated because promises were not kept. If the quantum industry continues to promise drug discovery next year and fails to deliver for a decade, the capital will dry up. The serious researchers will be left unfunded because the venture capitalists felt burned by the charlatans.

2. Misleading Policy

Governments are allocating billions of dollars and euros based on the premise that quantum computers will break encryption or solve climate change by 2030. If those resources are diverted from other vital technologies (like classical supercomputing, fusion, or biotech) based on false premises, we suffer a collective opportunity cost.

3. Erosion of Scientific Credibility

When reputable companies publish blog posts implying they have achieved the impossible, they erode the public’s trust in scientific institutions. Science relies on precision. When “complexity theory demonstration” is translated to “World Changing Breakthrough,” we lose the language of truth.

Final Thoughts: A Plea for Honesty

Quantum mechanics is the most successful theory in the history of physics. The effort to control it—to harness the entanglement of individual atoms—is a noble and awe-inspiring pursuit. The scientists working on error correction, materials science, and control theory are doing heroic work.

But we do not honor their work by lying about the results.

As of today, quantum computers have not solved a single new real-world problem that could not be solved classically. That is the baseline.

We have achieved: Better control. Incremental noise reduction. Proofs of principle.

We have NOT achieved: Industrial disruption. Practical computational advantage.

The path to a useful quantum computer is likely 10 to 20 years long. It is a marathon of physics and engineering. It is not a sprint of software and marketing.

If this reality makes you uncomfortable, good. That discomfort is the friction of the wheels finally touching the ground. Let’s stop looking at the headlines and start looking at the Hamiltonians. Let’s stop selling the sci-fi dream and start doing the hard, grinding engineering work required to make it real.

Science does not need mythology. It needs honesty.