Focus on AlphaEvolve, Google DeepMind’s AI That Could Boost Scientific Research Like Never Before

A scalable platform that improves the performance of algorithms across a wide range of scientific fields.

Scientific discoveries and the development of new algorithms require long and complex processes of ideation, exploration, testing, and validation. In recent years, large language models (LLMs) have helped to significantly accelerate these processes. However, despite increasingly powerful models, their practical applications and their ability to solve complex problems remain limited.

Most scientific applications of AI, such as Google DeepMind's AlphaFold protein structure prediction tool, have so far relied on algorithms designed specifically for a single domain. In other words, despite their performance, these algorithms offer limited versatility.

AlphaEvolve is designed to overcome this limitation by generating algorithms that can solve complex problems in a wide range of scientific fields. According to Mario Krenn, director of the Artificial Scientist Lab at the Max Planck Institute for the Science of Light in Erlangen, Germany, “AlphaEvolve is the first successful demonstration of discoveries based on general-purpose LLMs.”

An evolutionary process adapted to many fields

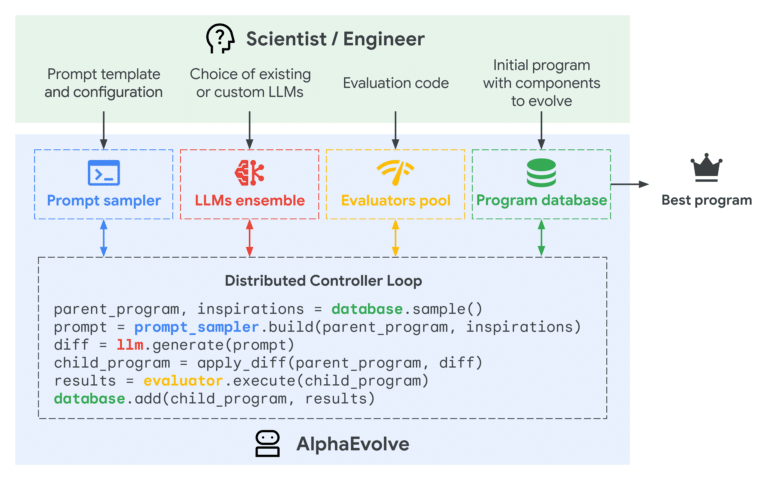

AlphaEvolve is built on Google DeepMind's Gemini family of LLMs. It is essentially an AI agent dedicated to programming, but more versatile than a standard Gemini-based chatbot. Chatbots always carry a risk of hallucination: because they generate text probabilistically, they can invent details. AlphaEvolve, in contrast, relies on an automated evaluation system to increase the accuracy of the algorithms it generates.

Each request begins with the user providing a question, evaluation criteria, and an initial solution. The model then generates hundreds or thousands of candidate modifications using Gemini Flash (which focuses on efficiency) and Gemini Pro (which focuses on accuracy). Each proposal is scored by an evaluation algorithm against performance metrics relevant to the task (for example, how efficiently computing jobs are assigned or how much resource waste is reduced).

The LLM then proposes new ideas based on the highest-scoring options. This evolutionary process lets the tool concentrate on the best candidates and refine them according to the user's needs, gradually producing a range of increasingly efficient algorithms, explains Matej Balog, an AI scientist at Google DeepMind and co-lead of the research. “We are exploring this diverse set of possible solutions,” he says.
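The generate, evaluate, and select loop described above can be illustrated with a toy sketch. It is not AlphaEvolve's implementation: the hypothetical `propose_modification` function stands in for the Gemini models by randomly perturbing a candidate, and the hypothetical `evaluate` function stands in for the user-supplied evaluation criteria by scoring a short list of numbers rather than a program.

```python
import random
from typing import Callable, List, Tuple

Candidate = List[float]


def evaluate(candidate: Candidate) -> float:
    """Hypothetical evaluation criterion (higher is better).
    Closeness to a fixed target vector stands in for a real task metric."""
    target = [3.0, -1.0, 2.0]
    return -sum((c - t) ** 2 for c, t in zip(candidate, target))


def propose_modification(parent: Candidate, rng: random.Random) -> Candidate:
    """Stand-in for the LLM: copy the parent and perturb one component."""
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] += rng.gauss(0.0, 0.5)
    return child


def evolve(initial: Candidate,
           score: Callable[[Candidate], float],
           generations: int = 200,
           proposals_per_generation: int = 20,
           population_size: int = 5,
           seed: int = 0) -> Tuple[Candidate, float]:
    rng = random.Random(seed)
    # Population of (score, candidate) pairs, seeded with the user's initial solution.
    population = [(score(initial), initial)]
    for _ in range(generations):
        proposals = []
        for _ in range(proposals_per_generation):
            # Pick a parent among the current best candidates and modify it.
            _, parent = rng.choice(population)
            child = propose_modification(parent, rng)
            proposals.append((score(child), child))
        # Keep only the highest-scoring candidates for the next round.
        ranked = sorted(population + proposals, key=lambda p: p[0], reverse=True)
        population = ranked[:population_size]
    best_score, best = population[0]
    return best, best_score


best, best_score = evolve([0.0, 0.0, 0.0], evaluate)
print(f"best candidate: {best}, score: {best_score:.4f}")
```

Because only the highest-scoring candidates survive each round, the best score in this sketch can never get worse from one generation to the next. The article describes AlphaEvolve applying a similar selection principle to code proposed by LLMs, at a far larger scale.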

AlphaEvolve's evolutionary approach builds on FunSearch, a system the company developed to compete with humans on certain mathematical problems. However, AlphaEvolve can generate much larger programs and is not limited to mathematics.

In addition, the generative AI systems on which the technology is built rely heavily on matrix multiplication, an operation that combines grids of numbers and is used extensively to train neural networks. For multiplying 4×4 matrices, the algorithm developed in 1969 by mathematician Volker Strassen had long been the most efficient known method.

However, according to Google DeepMind, AlphaEvolve has found an even more efficient method, multiplying 4×4 complex-valued matrices with 48 scalar multiplications instead of 49. Although intended for general use, the tool reportedly outperformed AlphaTensor, a model the company had developed specifically for matrix multiplication. It could thus improve the efficiency of generative AI, an essential factor in making the technology profitable.
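To make the 1969 baseline concrete, here is a minimal Python sketch of Strassen's scheme applied recursively to 4×4 matrices. It splits each matrix into 2×2 blocks and replaces the eight block products of the schoolbook method with seven, so a 4×4 product needs 7 × 7 = 49 scalar multiplications instead of 4³ = 64. The function names are illustrative, and the sketch does not reproduce the newer method attributed to AlphaEvolve.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sub(a: Matrix, b: Matrix) -> Matrix:
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def split(m: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """Return the four quadrants (top-left, top-right, bottom-left, bottom-right)."""
    h = len(m) // 2
    return ([row[:h] for row in m[:h]], [row[h:] for row in m[:h]],
            [row[:h] for row in m[h:]], [row[h:] for row in m[h:]])


def strassen(a: Matrix, b: Matrix, counter: List[int]) -> Matrix:
    """Multiply square matrices whose size is a power of two.
    counter[0] accumulates the number of scalar multiplications performed."""
    if len(a) == 1:
        counter[0] += 1
        return [[a[0][0] * b[0][0]]]
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    # Strassen's seven block products (the schoolbook method needs eight).
    m1 = strassen(add(a11, a22), add(b11, b22), counter)
    m2 = strassen(add(a21, a22), b11, counter)
    m3 = strassen(a11, sub(b12, b22), counter)
    m4 = strassen(a22, sub(b21, b11), counter)
    m5 = strassen(add(a11, a12), b22, counter)
    m6 = strassen(sub(a21, a11), add(b11, b12), counter)
    m7 = strassen(sub(a12, a22), add(b21, b22), counter)
    # Combine the products into the four quadrants of the result.
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(sub(add(m1, m3), m2), m6)
    top = [r1 + r2 for r1, r2 in zip(c11, c12)]
    bottom = [r1 + r2 for r1, r2 in zip(c21, c22)]
    return top + bottom


def naive(a: Matrix, b: Matrix) -> Matrix:
    """Schoolbook multiplication, used here only to check the result."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


a = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
b = [[16, 15, 14, 13], [12, 11, 10, 9], [8, 7, 6, 5], [4, 3, 2, 1]]
count = [0]
c = strassen(a, b, count)
print(count[0])          # 49
print(c == naive(a, b))  # True
```

Saving a single multiplication matters because such schemes are applied recursively to large matrices, where each saved block product compounds at every level.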

Better computing capabilities for data centers

Google DeepMind engineers applied AlphaEvolve to their own computing infrastructure, including their data center management system. The AI proposed improvements to the design of the next generation of tensor processing units (TPUs), chips designed specifically for machine learning workloads. It also suggested changes that improve the computing efficiency of the company's data centers, recovering 0.7% of its total computing resources worldwide, a significant competitive advantage.

AlphaEvolve was also tested on around 50 open mathematical problems, in areas such as combinatorial geometry. It “rediscovered” the best-known solutions in 75% of cases and found improved solutions in 20% of cases.

However, although it requires fewer computing resources than AlphaTensor, it remains far too complex for public use at this stage. The company nevertheless plans to make the tool more versatile and flexible. Google is planning an early access program for academics and intends to extend the tool to a wider range of problems, including in the natural sciences.
